Doing networking (in Rust & With<(Bevy, Renet)>)

This is a follow-up to my initial post about “Untitled Game Project” (read it here). This post details the majority of what I’ve done with regard to networking. I am not an expert on the subject, and it’s possible I have made a few errors. No matter, I hope it is somewhat informative & interesting.

How does one communicate between the server & client?

For Untitled Game Project, I use a library called Renet. Renet does have a plugin for Bevy called Bevy Renet, but I opted not to use it and instead reimplement what it does for myself.

On both the client & server, the “network loop” within a single frame looks something like this (where each square is a different system):

What’s a message? What’s a packet?

Throughout this post, I will refer to them with the following definitions:

- Message: The enum representation of a packet before being serialized into bytes.

- Packet: The post-serialization vector of bytes sent over the net.

What does a message look like?

A message is an enum. I use Bincode to serialize these enums into packets.

For example, here is a section of the definition for reliable messages sent from the server to the client:

#[derive(Debug, Serialize, Deserialize)]

pub enum ReliableServerMessage {

CreatePlayer {

entity: Entity,

translation: [f32; 3],

rotation: f32,

name: String,

},

RemoveEntities {

entities: Vec<Entity>,

},

Move {

entity: Entity,

translation: [f32; 3],

rotation: f32, // Yaw.

},

// etc

}

The server also has a wrapper event for these called SendReliableMessage. These events are individually serialized and sent to their MessageTarget(s).

pub struct SendReliableMessage {

pub target: MessageTarget,

pub message: ReliableServerMessage,

}

pub enum MessageTarget {

Single(Entity),

// Entities who see the passed in entity.

Seeing(Entity),

// etc

}

To serialize a ReliableServerMessage we call Bincode:

let packet = bincode::serialize(&message).unwrap();

And then we push the returned Vec<u8> to Renet.

How to refer to entities across the net?

I send the server’s Entity Id to the client, and then the client saves that for when it needs to convert back & forth. I have a RemoteId component and a RemoteToLocal resource for this purpose:

#[derive(Component)]

pub struct RemoteId(pub Entity);

#[derive(Default)]

struct RemoteToLocal(HashMap<Entity, Entity>);

It might not work out in the long term, but for now I think it’s fine.
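As a rough illustration of the conversion, here is a dependency-free sketch of such a lookup table. `EntityId` is a hypothetical stand-in for Bevy's `Entity`, and the `insert`, `local` and `remove` methods are assumptions made for the example, not part of the actual project.

```rust
use std::collections::HashMap;

/// Hypothetical stand-in for Bevy's `Entity`, so the sketch needs no crates.
#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash)]
pub struct EntityId(pub u64);

/// Maps server-side ids to the ids of the entities spawned locally.
#[derive(Default)]
pub struct RemoteToLocal(HashMap<EntityId, EntityId>);

impl RemoteToLocal {
    /// Remember which local entity represents `remote`.
    pub fn insert(&mut self, remote: EntityId, local: EntityId) {
        self.0.insert(remote, local);
    }

    /// Translate an id received from the server into a local one.
    pub fn local(&self, remote: EntityId) -> Option<EntityId> {
        self.0.get(&remote).copied()
    }

    /// Forget a mapping, e.g. when the server reports the entity as removed.
    pub fn remove(&mut self, remote: EntityId) -> Option<EntityId> {
        self.0.remove(&remote)
    }
}
```

A message such as Move would look up its entity with `local` before touching any components, and RemoveEntities would call `remove` for each id.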

Determining Network Relevancy.

We don’t want to send the state of the entire world to every client at all times. Players don’t need to know the movement of that bear on the other side of the world; it doesn’t matter to them. And why would we send something that’s irrelevant to the experience?

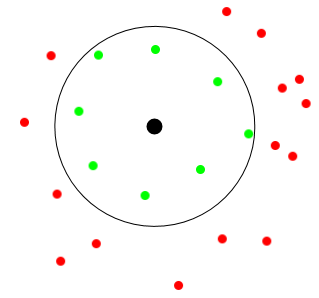

Instead we could replicate only entities within a certain distance of the player.

Any entity within the circle is sent to the client.

We’ll call this strategy Player-Out because I don’t know if anyone else has named it.

Player-Out works until we start having some size differences between entities. Ask yourself, does it make sense to replicate chickens at the same distances as dragons? The chicken is going to be irrelevant long before the dragon.

You'll probably lose track of the chicken before the dragon. Screenshot from Blizzard's World of Warcraft

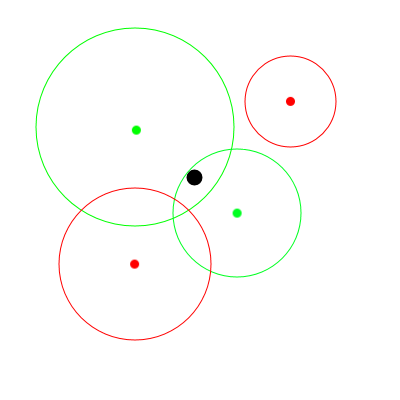

So we reverse it. Each replicated entity instead searches for players within their own individual replication range. These entities will then tell the player that they are relevant to it. This technique we’ll call Player-In.

Each entity now has its own replication range and informs the player when it becomes relevant or loses relevance.
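A minimal sketch of the Player-In idea, assuming 2D positions and a brute-force distance check. The `Replicated` struct and both function names are hypothetical and only serve the example.

```rust
use std::collections::HashSet;

/// A replicated entity with its own replication range (hypothetical type).
pub struct Replicated {
    pub id: u32,
    pub position: [f32; 2],
    pub range: f32,
}

/// Ids of the players inside `entity`'s replication range.
/// Brute force for clarity; a spatial structure would replace this scan.
pub fn players_in_range(entity: &Replicated, players: &[(u32, [f32; 2])]) -> HashSet<u32> {
    players
        .iter()
        .filter(|(_, pos)| {
            let dx = pos[0] - entity.position[0];
            let dy = pos[1] - entity.position[1];
            dx * dx + dy * dy <= entity.range * entity.range
        })
        .map(|(id, _)| *id)
        .collect()
}

/// Compare with the previous tick: returns (gained, lost) player ids, sorted.
pub fn relevance_changes(previous: &HashSet<u32>, current: &HashSet<u32>) -> (Vec<u32>, Vec<u32>) {
    let mut gained: Vec<u32> = current.difference(previous).copied().collect();
    let mut lost: Vec<u32> = previous.difference(current).copied().collect();
    gained.sort_unstable();
    lost.sort_unstable();
    (gained, lost)
}
```

Players in `gained` would receive a create message for the entity, and players in `lost` a remove message.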

There is a caveat to Player-In, which makes it slightly more complicated to work with: Since the entities themselves tell players if they gain or lose relevance, we need to handle the case where an entity is deleted. In that case, the entity can’t tell players it’s lost relevance (since it has been deleted).

My current solution is to emit an event in every spot where a replicated entity is deleted. That is fine while I only have player characters, but it will become increasingly annoying as entities are deleted for different reasons.

I think the best way for this to be solved would be by having a way to access the relevant data on deleted entities. This is not a feature offered by Bevy yet. Maybe in the future.

How do you figure out what’s in range?

KD-Trees. I use Bevy Spatial: all entities with the Player component are put into a KD-Tree, and when updating network relevance we query that KD-Tree for all entities within replication range. It’s simple, it works, and it’s fast.

I love open-source libraries.

These packets take up too much bandwidth.

If we look back at ReliableServerMessage::Move and do some math for the resulting packet size we get: 1 (Message Type) + 8 (Entity) + 4 * 3 (Translation) + 4 (Rotation) = 25 bytes. Let’s say we send moves 20 times per second. That’s 500 bytes per second per moving entity or 4 kilobit/s each. How many characters can you expect to have around you in an RPG?

This is a screenshot from Obsidian's The Outer Worlds.

But that’s just the ones immediately visible. I know there are more NPCs just behind the walls, which are still in network range, so let’s double that as our NPC estimate: 500 * 42 = 21 000 bytes or 168 kb/s per player.

We could also go the Valorant route of not replicating obscured entities but that's more effort.

If we get into combat, we’ll also need to send the current health. Let’s say 1/3rd of the characters’ healths change in the time between us sending packets; that’s (1 (Message Type) + 8 (Entity) + 4 (health as float)) * 20 * 14 (Characters that changed) = 3 640 bytes. In total, we are now at 197.12 kb/s per player.

That’s not a lot for today, only ~0.2 Mb/s, but we could do better, a lot better. Let’s not settle for “okay” bandwidth usage.
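The estimate above can be reproduced with a few lines of arithmetic. This is only a sketch of the calculation in the text (20 sends per second, 42 characters, 14 of them changing health); the function and constant names are made up for the example.

```rust
/// Kilobits per second used by one message type.
pub fn kilobits_per_second(bytes_per_message: u32, sends_per_second: u32, entities: u32) -> f64 {
    let bytes_per_second = bytes_per_message * sends_per_second * entities;
    f64::from(bytes_per_second) * 8.0 / 1000.0
}

/// Message type + entity + translation + rotation.
pub const MOVE_BYTES: u32 = 1 + 8 + 4 * 3 + 4;

/// Message type + entity + health as f32.
pub const HEALTH_BYTES: u32 = 1 + 8 + 4;
```

Calling `kilobits_per_second(MOVE_BYTES, 20, 42)` gives the 168 kb/s for movement, and adding `kilobits_per_second(HEALTH_BYTES, 20, 14)` brings the total to the 197.12 kb/s quoted above.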

Shrinking the packets.

ReliableServerMessage::Move takes up a large majority (~85%) of our bandwidth. Let’s take another look at that message.

Move {

entity: Entity,

translation: [f32; 3],

rotation: f32, // Yaw.

}

We don’t really need a float for rotation. If we can accept a precision of 0.1 degrees, we can fit our 360 degrees of rotation into a u16:

let quantized_rotation = (rotation_as_degrees * 10.0) as u16; // On the client side we divide by 10.0 instead. You likely want to store the 10.0 as a constant somewhere.

That saves 2 bytes. We are down to 183.68 kb/s!
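A small round-trip sketch of this quantization, under a few assumptions that are not in the original snippet: the angle is wrapped into [0, 360) first so negative yaws survive the cast, the value is rounded rather than truncated, and the constant name is hypothetical.

```rust
/// Steps per degree: 10 gives 0.1 degree precision (0..=3599 fits in a u16).
pub const ROTATION_STEPS_PER_DEGREE: f32 = 10.0;

/// Server side: degrees -> u16.
/// `rem_euclid` wraps into [0, 360) so negative angles do not saturate to 0,
/// and the final `% 3600` folds a value that rounds up to 360.0 back to 0.
pub fn quantize_rotation(degrees: f32) -> u16 {
    let steps = (degrees.rem_euclid(360.0) * ROTATION_STEPS_PER_DEGREE).round();
    (steps as u16) % 3600
}

/// Client side: u16 -> degrees.
pub fn dequantize_rotation(quantized: u16) -> f32 {
    f32::from(quantized) / ROTATION_STEPS_PER_DEGREE
}
```

The worst-case error after a round trip is half a step, so 0.05 degrees.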

Now, translation. At the scale of 1 unit = 1 m, we’ll be fine with only 0.01 units or 1 cm precision. I doubt anyone will notice. But we can’t just quantize the translation like we do rotation. We’d limit ourselves to just 655.36 meters of playspace with a u16 (2^16 / 100). That’s not even 6 American football fields (or 41 chunks in Minecraft)!

Luckily, someone else had this problem before and came up with a solution:

Delta Compression.

Instead of sending the full translation of the player, we could send the difference between the last network update and now. We can have our players moving at multiple times the speed of an Olympic sprinter and only need 1 byte to encode the delta.

We only need to store whatever transform we last sent in the quantized format:

pub struct ReplicatedTransform {

pub translation: IVec3,

pub rotation: i16,

}

Why store it quantized? To prevent any drift from moving <1 cm in a tick.

Initially I saved the unquantized version, this caused characters to drift as we accumulated missed millimeters.

And then calculate the delta as such:

let quantized_position = transform.translation.div(TRANSLATION_PRECISION).as_ivec3();

let quantized_delta = quantized_position - replicated_transform.translation;
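To see why storing the quantized value avoids drift, here is a dependency-free sketch using plain arrays instead of glam's vectors. The helper names are hypothetical, and it rounds to the nearest centimetre where the snippet above truncates.

```rust
/// 1 cm precision at 1 unit = 1 m.
pub const TRANSLATION_PRECISION: f32 = 0.01;

/// Convert a world position into whole centimetres.
pub fn quantize(position: [f32; 3]) -> [i32; 3] {
    position.map(|axis| (axis / TRANSLATION_PRECISION).round() as i32)
}

/// Server side: returns the delta to send and updates the last-sent
/// quantized position.
pub fn next_delta(last_sent: &mut [i32; 3], position: [f32; 3]) -> [i32; 3] {
    let quantized = quantize(position);
    let delta = [
        quantized[0] - last_sent[0],
        quantized[1] - last_sent[1],
        quantized[2] - last_sent[2],
    ];
    *last_sent = quantized;
    delta
}

/// Client side: apply a received delta to the last known quantized position.
pub fn apply_delta(known: &mut [i32; 3], delta: [i32; 3]) {
    for axis in 0..3 {
        known[axis] += delta[axis];
    }
}
```

Because every delta is the difference between two quantized positions, the deltas always sum to the latest quantized position. A character creeping forward 4 mm per tick sends a mix of 0 and 1 cm deltas and still ends up where the server has it.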

Wait a second, those aren’t i8!

Correct. Remember several paragraphs back when I mentioned Bincode? We can tell it to encode integers in the smallest possible size automatically. This is called VarintEncoding. It’s not always perfectly efficient, but it’s good enough overall.
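To get a feel for the sizes involved, the sketch below re-implements the size rule as Bincode's documentation describes it for VarintEncoding: values below 251 take one byte, larger values take a marker byte plus a fixed-width integer, and signed values are zigzag-mapped first. It does not call Bincode, and the function names are hypothetical.

```rust
/// Zigzag-map a signed value so small magnitudes become small unsigned values
/// (0 -> 0, -1 -> 1, 1 -> 2, -2 -> 3, ...).
pub fn zigzag(value: i64) -> u64 {
    ((value << 1) ^ (value >> 63)) as u64
}

/// Encoded size in bytes of an unsigned integer under the varint scheme.
pub fn varint_len(value: u64) -> usize {
    if value < 251 {
        1 // The value itself fits in the single byte.
    } else if value <= u64::from(u16::MAX) {
        3 // Marker byte + u16.
    } else if value <= u64::from(u32::MAX) {
        5 // Marker byte + u32.
    } else {
        9 // Marker byte + u64.
    }
}

/// Encoded size in bytes of a signed integer under the varint scheme.
pub fn signed_varint_len(value: i64) -> usize {
    varint_len(zigzag(value))
}
```

Under these rules a per-tick delta between -125 and 125 cm fits in a single byte, even though the field is declared as an i32.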

The packet we send then becomes:

MoveDelta {

entity: Entity,

x: i32,

y: i32,

z: i32,

rotation: i16,

}

If we also switch to sending the rotation delta, this usually ends up being only 6 bytes: 1 (Message-Id) + 1 (Entity) + 1 (x) + 1 (y) + 1 (z) + 1 (rotation). Oh yeah, the entity also takes advantage of VarintEncoding.

Revisiting our previous math, moves now cost 6 * 20 * 42 = 5 040 bytes and health (1 + 1 + 4) * 20 * 14 = 1 680 bytes, so we should only use 53.76 kb/s per player (taking into account that with VarintEncoding the entity in the health packet also shrunk to 1 byte). That’s only 27.3% of what we started with!

So why does the network profiler say 152.32 kb/s? Why are we sending 11 bytes extra with each packet?

It might not say exactly that. Math & Networking is hard.

These packets still take up too much bandwidth, and it’s due to the header.

Renet uses UDP under the hood to communicate. UDP packets have an 8-byte header attached to them. UDP is also not reliable, and reliability is important for our delta compression to function: if we miss a packet, the character is out of alignment until it next exits & re-enters relevance (this is the only time we send the full translation now).

To account for the lack of reliability, Renet appends a u16 id to every packet and resends the packet if it isn’t acknowledged within a certain time (this is still much more efficient than a TCP header). But a u16 is only 2 bytes? Where does the last byte come from? Our good friend VarintEncoding; this is the “not always perfectly efficient” thing I mentioned before. When we get over 250, we need a byte to tell us what type the integer is.

For our 1 120 packets, we need 12 320 bytes of packet headers per second, but only because we send them all individually. We could make one big packet for each network tick, and then we only need to send 220 bytes of packet headers.
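The header numbers above are simple enough to check in code. A sketch, with hypothetical names, using the 11 bytes of per-packet overhead from the text:

```rust
/// Per-packet overhead from the text: UDP header + u16 id + varint marker.
pub const HEADER_BYTES: u32 = 8 + 2 + 1;

/// Header bytes sent per second for a given number of packets per second.
pub fn header_bytes_per_second(packets_per_second: u32) -> u32 {
    HEADER_BYTES * packets_per_second
}
```

With 42 move messages and 14 health messages sent individually 20 times per second, that is 1 120 packets. Batched into one packet per tick, it is just 20.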

We will have to split up the serialization & send steps.

Batching Packets

To batch all messages into a single big packet, we need a buffer to fill up.

const MAX_PACKET_SIZE: usize = 1200;

pub struct PlayerPacketBuffer {

pub buffer: Vec<u8>,

}

impl Default for PlayerPacketBuffer {

fn default() -> Self {

Self { buffer: Vec::with_capacity(MAX_PACKET_SIZE) }

}

}

What is MAX_PACKET_SIZE? The maximum size of packet we can send without it being fragmented.

This limit comes from the MTU (maximum transmission unit) of the network path. If we reach that value, we'll push the packet out and continue in another buffer.

Then instead of sending each individual message over to Renet once we have serialized it, we push it into the client’s PlayerPacketBuffer.
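One way the push-and-flush behaviour could look, as a sketch only: the `push` and `flush` methods are assumptions for the example, and it assumes no single serialized message is larger than MAX_PACKET_SIZE.

```rust
pub const MAX_PACKET_SIZE: usize = 1200;

#[derive(Default)]
pub struct PlayerPacketBuffer {
    buffer: Vec<u8>,
}

impl PlayerPacketBuffer {
    /// Append one serialized message. If it would not fit, the full buffer
    /// is returned so the caller can send it, and a fresh one is started.
    pub fn push(&mut self, message: &[u8]) -> Option<Vec<u8>> {
        let flushed = if self.buffer.len() + message.len() > MAX_PACKET_SIZE {
            self.flush()
        } else {
            None
        };
        self.buffer.extend_from_slice(message);
        flushed
    }

    /// Take whatever has accumulated, e.g. at the end of a network tick.
    pub fn flush(&mut self) -> Option<Vec<u8>> {
        if self.buffer.is_empty() {
            None
        } else {
            Some(std::mem::replace(
                &mut self.buffer,
                Vec::with_capacity(MAX_PACKET_SIZE),
            ))
        }
    }
}
```

The serialization system would call `push` for every message, and a later send system would call `flush` once per player per network tick and hand the bytes to Renet.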

The decoding code below is written against Bincode 2.0.0-rc.2.

On the clientside deserializing packets ends up looking like this:

while let Some(packet) = client.receive_message(ServerChannel::ReliableActions.id()) {

let mut cursor = 0;

let max_index = packet.len();

while cursor < max_index {

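let result: Result<(ReliableServerMessage, usize), DecodeError> =

bincode::decode_from_slice(&packet[cursor..max_index], options);

match result {

Ok((message, bytes_read)) => {

cursor += bytes_read; // Advance past the message we just decoded.

// Deal with message here.

}

Err(_) => break, // Malformed data, drop the rest of this packet.

}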

}

}

Another advantage of batching packets is that we could apply zstd compression on the big buffer and probably save additional bandwidth. I’m not doing that yet, though maybe I will once this is a bit further through development.

Interpolating movement on the client.

Until making the above reworks, I thought I had a “good-enough” system for movement interpolation. I took the time the packets arrived at face value and then used that time to interpolate between them. The server never told the client the time when the packets were sent (because of bandwidth overhead!). This isn’t a good idea: things will start going wrong when packets arrive too late and timings get inconsistent.

But if we have this big buffer of a bunch of packets, we can cheaply stamp the server time onto the end of that buffer. Then we can use that time when interpolating.

And somewhere when a client connects, we'll figure out the server time.
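Stamping the time could be as simple as appending four bytes. This sketch assumes a little-endian u32 of milliseconds at the very end of the packet; both function names and the exact layout are hypothetical.

```rust
/// Server side: append the send time (milliseconds) to a finished buffer.
pub fn stamp_server_time(buffer: &mut Vec<u8>, server_time_millis: u32) {
    buffer.extend_from_slice(&server_time_millis.to_le_bytes());
}

/// Client side: split a packet into its messages and the trailing timestamp.
/// Returns `None` if the packet is too short to contain a timestamp.
pub fn split_server_time(packet: &[u8]) -> Option<(&[u8], u32)> {
    let split_at = packet.len().checked_sub(4)?;
    let (messages, stamp) = packet.split_at(split_at);
    let mut bytes = [0u8; 4];
    bytes.copy_from_slice(stamp);
    Some((messages, u32::from_le_bytes(bytes)))
}
```

The client would call `split_server_time` first and then run its message decoding loop over the returned slice only, so the timestamp is never mistaken for a message.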

The algorithm for interpolation becomes quite simple, represented in semi-pseudocode:

while has_another_snapshot() {

let next_snapshot = get_next_snapshot();

let target_translation = next_snapshot.translation;

let target_rot = next_snapshot.rotation;

let target_server_time = next_snapshot.server_time_millis;

if current_time > target_server_time {

current_snapshot = next_snapshot;

transform.translation = target_translation;

transform.rotation = target_rot;

remove_next_snapshot_from_queue();

continue;

}

let progress = (current_time - current_snapshot.arrival_time) / (target_server_time - current_snapshot.arrival_time);

transform.translation = lerp(

current_snapshot.translation,

target_translation,

progress,

);

transform.rotation = lerp(

current_snapshot.rotation,

target_rot,

progress,

);

break;

}

If a packet is a bit late, we’ll still get to the correct interpolated position for that time, with no speeding up or slowing down due to uneven packet times. There may be a bit of a snap into the correct translation, but I at least feel that to be preferable to having an uneven speed.
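For readers who want something they can run, here is a compact version of the same loop. It is a sketch under several assumptions: a single f32 axis instead of a full transform, snapshots queued in send order, a `render_time` already expressed on the server clock, and hypothetical type and function names.

```rust
use std::collections::VecDeque;

#[derive(Clone, Copy, Debug, PartialEq)]
pub struct Snapshot {
    pub translation: f32, // One axis only, to keep the sketch short.
    pub server_time_millis: u32,
}

fn lerp(from: f32, to: f32, progress: f32) -> f32 {
    from + (to - from) * progress
}

/// Returns the translation to display at `render_time` (server clock, millis).
/// `current` is the snapshot we interpolate from; `queue` holds newer
/// snapshots in send order.
pub fn interpolate(current: &mut Snapshot, queue: &mut VecDeque<Snapshot>, render_time: u32) -> f32 {
    while let Some(&next) = queue.front() {
        if render_time >= next.server_time_millis {
            // Already past this snapshot: adopt it and look at the next one.
            *current = next;
            queue.pop_front();
            continue;
        }
        let elapsed = render_time.saturating_sub(current.server_time_millis) as f32;
        // `max(1)` guards against a zero-length span between two snapshots.
        let span = next
            .server_time_millis
            .saturating_sub(current.server_time_millis)
            .max(1) as f32;
        return lerp(current.translation, next.translation, elapsed / span);
    }
    // Nothing newer to interpolate towards: hold the latest known value.
    current.translation
}
```

Since progress depends only on the stamped server times, a snapshot that arrives late is still placed at the right point on the timeline once it is in the queue.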

Jitter buffer.

To make sure there is always another snapshot to interpolate towards, the client displays the world slightly in the past. The offset is one network tick plus a little extra to cover up any network “jitter”:

const TIME_BETWEEN_NETWORK_TICKS: u32 = 50;

const JITTER_BUFFER: u32 = 25; // I have been keeping this at 1/2 of TIME_BETWEEN_NETWORK_TICKS when testing locally. It's possible you need more in real network conditions.

const BUFFER_TIME: u32 = TIME_BETWEEN_NETWORK_TICKS + JITTER_BUFFER; // We are always offset by this time so we can: 1) Always have another snapshot to interp towards. 2) Cover up any network "jitter".
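How the offset might be applied, as a sketch: the client subtracts BUFFER_TIME from its estimate of the current server time and interpolates at the resulting timestamp. The `render_time` function and its parameter are assumptions for the example.

```rust
pub const TIME_BETWEEN_NETWORK_TICKS: u32 = 50;
pub const JITTER_BUFFER: u32 = 25;
pub const BUFFER_TIME: u32 = TIME_BETWEEN_NETWORK_TICKS + JITTER_BUFFER;

/// The time on the server clock that the client should display right now.
/// `saturating_sub` avoids underflow during the first few milliseconds.
pub fn render_time(estimated_server_time_millis: u32) -> u32 {
    estimated_server_time_millis.saturating_sub(BUFFER_TIME)
}
```

With these values the client always shows the world 75 ms behind the server, which leaves room for one full tick plus 25 ms of delay before it runs out of snapshots.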

This isn’t the best way to do it, but I’m not making a competitive shooter like Overwatch. If you want a better way of doing this, you should have a gander at Blizzard’s “Overwatch Gameplay Architecture and Netcode” GDC talk (if you can find it, as it was removed from the GDC YouTube channel last year).

Rounding up.

I only run “network ticks” 10 times per second and use a JITTER_BUFFER_TIME_MILLIS of 150. I think it works quite well.

Networking is an interesting subject. I’ve tried to cover it in the order I figured things out. I’ve been lurking quite a bit in the network channel on the Bevy Discord. It’s very useful for learning. I also recommend the mentioned Overwatch GDC talk & Snapshot Compression (Gaffer On Games).

All the networking-related systems make quite good use of change detection, which I again neglected to mention. My movement replication system looks like this on the server side:

pub fn network_send_delta_position_system(

mut messages: EventWriter<SendReliableMessage>,

mut query: Query<(Entity, &Transform, &mut ReplicatedTransform), Changed<Transform>>,

) {

for (entity, transform, mut replicated_transform) in query.iter_mut() {

let quantized_rotation = (transform

.rotation

.to_euler(bevy::prelude::EulerRot::XYZ)

.1

.to_degrees()

/ ROTATION_PRECISION) as i16; // ROTATION_PRECISION == 0.1

let quantized_position = transform.translation.div(TRANSLATION_PRECISION).as_ivec3(); // TRANSLATION_PRECISION == 0.01

let delta_translation = quantized_position - replicated_transform.translation;

let delta_rotation = quantized_rotation - replicated_transform.rotation;

if delta_rotation != 0 || delta_translation != IVec3::ZERO {

messages.send(SendReliableMessage {

target: MessageTarget::Seeing(entity),

message: ReliableServerMessage::MoveDelta {

entity,

x: delta_translation.x,

y: delta_translation.y,

z: delta_translation.z,

rotation: delta_rotation,

},

});

}

replicated_transform.translation = quantized_position;

replicated_transform.rotation = quantized_rotation;

}

}

It only looks at entities whose transform has been mutably dereferenced. It’s fantastic. No need to mark the transform as dirty everywhere it’s changed or check every entity every tick. Bevy simply handles it for me.

Written 15 Oct 2022 by Grim